Archive for 2009|Yearly archive page

Complexity

In Virtualized Data center on February 16, 2009 at 10:59 pm

I recently came across Benjamin Black’s blog on complexity in the context of AWS. He says:

i now see complexity moving up the stack as merely an effect of complexity budgets. like anything worth knowing, complexity budgets are simple: complexity has a cost, like any other resource, and we can’t expect an infinite budget.

spending our complexity budget wisely means investing it in the areas where it brings the most benefit (the most leverage, if you must), sometimes immediately, sometimes only once a system grows, and not spending it on things unessential to our goals.

What drives design complexity in the Cloud computing infrastructure space?

Finding the right mix of functional “differentiation” versus “integration” at every level or tier of the design (whether hardware or software), together with technology and business constraints, drives the “complexity budget”.

“Differentiation” at the functional level is pretty well understood but still evolving: routers, switches, compute and storage nodes are familiar categories, although the very basis of how these functions are realized is changing (e.g. Cisco is supposed to be gearing up to sell servers).

“Integration” of the various infrastructure functions in the data center is always a non-trivial system (integration) expense.

Some examples of technology constraints:

- From the chip level to the system level, energy efficiency is improving much more slowly than hardware density. Consequently, the inability to consume power in proportion to utilization levels results in sub-optimal cost/pricing structures.

- For Cloud providers, the context/environment for virtualization is really about defining what it means to have a “virtualized data center”. Cisco’s Unified Computing is one example.

- Workload characterization & technology mapping
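To make the first constraint concrete, here is a minimal sketch that uses a linear server power model. The model is a common simplification, not something taken from a specific vendor, and the wattages and function names are illustrative assumptions.

```python
def power_draw_watts(utilization, idle_watts, peak_watts):
    """Linear model: draw rises from idle_watts to peak_watts as utilization goes 0..1."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return idle_watts + (peak_watts - idle_watts) * utilization


def watts_per_unit_of_work(utilization, idle_watts, peak_watts):
    """Power spent per unit of useful work, taking work as proportional to utilization."""
    if utilization <= 0.0:
        raise ValueError("no useful work is done at zero utilization")
    return power_draw_watts(utilization, idle_watts, peak_watts) / utilization


# Hypothetical server: 200 W when idle, 400 W at full load, running at 25% utilization.
typical = watts_per_unit_of_work(0.25, idle_watts=200.0, peak_watts=400.0)

# An ideal energy-proportional server would draw nothing when idle.
ideal = watts_per_unit_of_work(0.25, idle_watts=0.0, peak_watts=400.0)

print(typical, ideal)  # 1000.0 400.0
```

Under these assumed numbers, the non-proportional server spends 2.5 times as much power per unit of work at 25% utilization, and that gap is what ends up in the cost/pricing structure.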

Business constraints: typically, learning curve/market adoption, cost and time-to-market.

The need to produce manageable systems, and the interdependencies that run from the chip level to the board, system, software and ultimately the data center level, require us to resort to holistic, iterative thinking and design. We have to consider function and interaction at all levels, in the context of the containing environment, i.e. what it means to have a “fully virtualized” data center.

Ironically, Virtualization gets more attention than the “Reconfigurability” that is needed (in response to virtualization, workload and utilization variations) at all levels: compute, storage, interconnect, power/cooling, perhaps even topology within the data center.

After all, Cloud providers would want:

- “proportional power consumption” at the data center level

- reduced system integration costs with fully integrated (virtualization aware) compute, storage & interconnect stack

- optimal cloud computing operations, even for non-virtualized environments (let’s face it, there are tons of scenarios that don’t necessarily need virtualization; by the same token, there are virtualization solutions that enable better utilization but don’t require hypervisors).

- complete automation of managed IT environments

This is all about moving the complexity away from IT customers (who adopt the cloud computing model) and into the data centers of cloud providers.

Java Observability, Manageability in the Cloud

In API, Manageability on January 30, 2009 at 10:14 pm

Have you done this fire drill? You have a high traffic/volume web application, and it is sluggish or unstable. Your team is called in to figure out whether the problem is in the application, the middleware stack, the operating system or somewhere else in the deployment configuration.

This is where Observability comes in: being able to dynamically probe resource usage (across all levels of the infrastructure/application stack) at a granular level, control probing overheads, and associate actions or triggers with those probes at all levels. At the system level, DTrace is a good example, on Solaris and Mac OS X. There’s even a Java API for DTrace. Of course, there are other profiling libraries that offer low-overhead, extremely fine-grained probes and aggregation capabilities (e.g. JETM).
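As a rough illustration of those three ingredients (probes that can be switched on dynamically, bounded probing overhead, and triggers attached to probes), here is a minimal in-process sketch. It is not DTrace or JETM: the `Probe` class, its sampling parameter and the trigger callback are all hypothetical names chosen for this example.

```python
import time


class Probe:
    """A named timing probe that can be toggled at runtime and times 1 in N calls."""

    def __init__(self, name, sample_every=1, threshold_s=None, on_breach=None):
        self.name = name
        self.enabled = False              # probes stay off until explicitly enabled
        self.sample_every = sample_every  # overhead control: time only every Nth call
        self.threshold_s = threshold_s    # optional trigger threshold, in seconds
        self.on_breach = on_breach        # optional action, called as on_breach(name, elapsed)
        self.calls = 0
        self.samples = []

    def record(self, elapsed_s):
        """Store one measurement and fire the trigger if it exceeds the threshold."""
        self.samples.append(elapsed_s)
        if self.threshold_s is not None and elapsed_s > self.threshold_s and self.on_breach:
            self.on_breach(self.name, elapsed_s)

    def measure(self, func, *args, **kwargs):
        """Run func, timing it only when the probe is enabled and this call is sampled."""
        if not self.enabled:
            return func(*args, **kwargs)
        self.calls += 1
        if self.calls % self.sample_every != 0:
            return func(*args, **kwargs)
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            self.record(time.perf_counter() - start)

    def summary(self):
        """Aggregate the samples collected so far."""
        if not self.samples:
            return {"count": 0, "mean_s": None, "max_s": None}
        return {
            "count": len(self.samples),
            "mean_s": sum(self.samples) / len(self.samples),
            "max_s": max(self.samples),
        }


alerts = []
probe = Probe("checkout", sample_every=2, threshold_s=0.5,
              on_breach=lambda name, elapsed: alerts.append((name, elapsed)))

probe.measure(sum, [1, 2, 3])   # probe disabled: the call runs untimed
probe.enabled = True            # switched on while the application is running
for _ in range(4):
    probe.measure(sum, [1, 2, 3])
probe.record(0.75)              # simulate one slow request to show the trigger firing
```

With `sample_every=2`, only two of the four calls are timed, which is the overhead control; the simulated 0.75 second measurement is the only one that reaches the `on_breach` action.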

Manageability is another important consideration: being able to control and manage applications via standard management systems. This involves (hopefully) consistent instrumentation mechanisms, and standard isolation mechanisms between the IT resources being managed and external management systems. JMX is an example of one such standard. Options such as a JMX to SNMP bridge, or MIBs compiled to MXBeans, are some methods of integrating managed resources into higher level (management) frameworks.

Flexible binding of computing infrastructure to workloads is a key value proposition of the Cloud Computing model. Cloud computing providers like Amazon serve the needs of typical web workloads by providing access to their dynamic infrastructure.

The problem is workload diversity. Vendors like Amazon and Google essentially offer distributed computing workload platforms. Imagine tracing application performance issues on a distributed platform!

So, why are Observability and Manageability more important in the Cloud?

Because consumption of a fixed resource like CPU hours is not optimal when you are on an “elastic”/dynamic infrastructure. You’d want to pay for exact utilization levels.

Because you want monitoring arrangements that work seamlessly, as they do on a single system, but on top of “elastic”/dynamic infrastructure.

There are many other reasons, of course:

Being able to manage the lifecycle of applications in an automated manner, in a distributed computing environment, is perhaps the biggest use case for Manageability in the Cloud.

Metering, Billing, Performance monitoring, Sizing and Capacity planning are some examples of activities in the Cloud computing model that leverage the same underlying Observability principles (Instrumentation, dynamic probes, support for aggregating metrics etc).

Let’s look at a couple of examples of what Observability and Manageability capabilities can enable in the Cloud computing context:

- Customers can pay at a more granular level, for Throughput (Requests or Transactions processed per unit of time) at a given latency (and other SLA items), so that the charge-back model makes sense in the context of their business activities.

- Enable better business opportunities in a cost-effective manner. For example, Mashery focuses on metering/instrumentation at the API level. The proposition in this context: open up your APIs and meter them, which gives you an automated “business development filtering” mechanism. This is attractive if you’re in the right business, even on the “long tail”, i.e. where customer traffic/volume is not high but you at least have an ecosystem (hundreds of developers or partners) to support the long tail without burning through your bank account. See my earlier post on RESTful business for more context; these considerations are more valid in the Cloud computing paradigm.

- Ensuring DoS style attacks don’t give you a heart attack (because your elastic cloud racked up a huge bill)
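The first and last examples above (paying for throughput, and not letting a traffic flood run up the bill) can be sketched together. This is only an illustration under assumed numbers: the tariff, the daily cap and the function names are hypothetical, and amounts are kept as integer millionths of a currency unit to avoid rounding surprises.

```python
def throughput_charge_micros(requests_served, micros_per_request):
    """Charge for requests actually served, in millionths of a currency unit."""
    return requests_served * micros_per_request


def admit_request(spend_micros, micros_per_request, cap_micros):
    """Return True while serving one more request keeps the bill within the cap."""
    return spend_micros + micros_per_request <= cap_micros


# Hypothetical tariff: 0.25 currency units per 1000 requests, capped at 1.0 unit per day.
MICROS_PER_REQUEST = 250
DAILY_CAP_MICROS = 1_000_000

served = 0
for _ in range(10_000):  # a traffic spike, legitimate or not
    spend = throughput_charge_micros(served, MICROS_PER_REQUEST)
    if not admit_request(spend, MICROS_PER_REQUEST, DAILY_CAP_MICROS):
        break            # stop scaling out (or start throttling) once the cap is reached
    served += 1

final_spend = throughput_charge_micros(served, MICROS_PER_REQUEST)
print(served, final_spend)  # 4000 1000000
```

The cap here is a blunt instrument; in practice you would probably pair it with alerting, so that a legitimate traffic surge gets a human decision rather than a silent cut-off.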

Observability and Manageability are key “infrastructure” capabilities in the Cloud computing model that enable features and value propositions such as the ones discussed above. These are not new ideas, but an adaptation of time-tested ideas to a new computing paradigm (i.e. predominantly distributed computing over adaptive/dynamic infrastructure, though other variations will crop up).

What color is your Cloud fabric?

In Business models, Cloud Computing, Use Cases on January 15, 2009 at 9:30 pm

“Cloud Fabric” is a phrase that conjures up myriad complex capabilities and unique features, depending on the vendor you talk to. Here, I take the wabi-sabi approach of simplifying things down to the essentials, and then try to make sense of the different vendor variations within a simple, high-level framework or mental model.

Before we go into details, a caveat: the types of Cloud fabric discussed in this framework are always provisional, in the sense that technological improvements (e.g. Intel already has a research project with 80 cores on a single chip) will influence this space in significant ways.

Back to the topic: loosely defined, Cloud fabric is a term used to describe the abstraction for Cloud platform support. It should cover all aspects of the life-cycle and (automated) operational model for Cloud computing. While there are many different flavors of Cloud fabric from vendors, it is important to keep in mind the general context that drives features into the Cloud fabric: the “Cloud fabric” must support, as transparently as possible, the binding of an increasing variety of Workloads to (varying degrees of) Dynamic infrastructure. Of course, vendors may specialize in fabric support for specific types of Workloads, with limited or no support for Dynamic infrastructure. By Dynamic infrastructure, I mean that it is not only “elastic”, but also “adaptive” to your deployment optimization needs.

That means your compute, storage and even networking capacity is sufficiently “virtualized” and “abstracted” that elasticity and adaptiveness directly address application workload needs, as transparently as possible. (For example, is the PaaS or IaaS provider on converged data center networks, or do they still have I/O and storage networks in parallel universes?) If the VMs running your application components can migrate, so should storage and interconnection topologies (firewall, proxy and load balancer rules, etc.), seamlessly and, from a customer perspective, “transparently”.

Somewhere all of this virtualization meets the “un-virtualized” world!

Whether you are a PaaS/IaaS, “Cloud-center” or “Virtual private Data center” provider etc, variations in the Cloud fabric capabilities stem from the degree of support across both these dimensions:

- Breadth of Workload categories that can be supported. E.g. AWS and Google App Engine platform support is geared towards workloads that fit the Shared-nothing, distributed computing model based on Horizontal scalability using OSS, commodity servers and (mostly) generic switch network infrastructure. Keep in mind that Workload characterization is an active research area, so I won’t pretend to boil this ocean (I am not an expert by any stretch of the imagination), but will just represent the broad areas that seem to be driving the Cloud business models.

- How “Dynamic” the Cloud infrastructure layer is in supporting the Workload categories above: hardware capacity, configuration and the general end-to-end topology of the deployment infrastructure matter when it comes to performance. No single Cloud fabric can transparently accommodate the performance needs of every type of Workload. E.g. I wouldn’t try to dynamically provision VMs for my online gaming service on AWS unless I could also control routing tables and multicast traffic strategy. (Generally, in a distributed computing architecture, you want to push the processing of state information close to where your data is. In the online gaming use case, that could mean your multicast packets need to reach only the limited set of nodes that are in the same “online neighborhood” as the players.) Imagine dynamically provisioning VMs and storage for such an application across your data center without any thought to deployment optimization. Without an adaptive infrastructure under the covers, an application that has worked perfectly in the past might experience serious performance issues in the Cloud environment. Today, the PaaS programming and deployment model determines the Workload match.

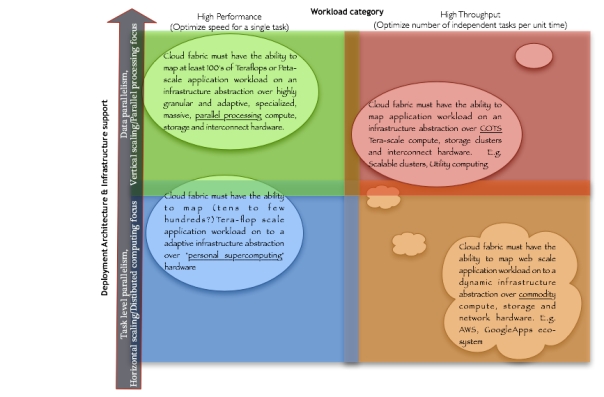

So, in general, Cloud fabric should support Transparent scaling, Metering/Billing, “Secure”, Reliable and Automated management, Fault tolerance, Deployment templates, good programming/deployment support, “adaptive” clustering etc. But specific Cloud fabric features and Use Case support depend on where your application sits at the intersection of the Workload and the need for Dynamic/adaptive Infrastructure. Here’s what I mean…

Example of functional capabilities required of Cloud fabric based on Workload type and Infrastructure/deployment needs

Let me explain the above table: the Y-axis represents a continuum of the deployment technologies and infrastructure used, from support for “Task level parallelism” (typically, thread-parallel computation where each core might be running different code) at the bottom to “Data parallelism” (typified by multiple CPUs or cores running the same code against different data) at the top. The X-axis broadly represents the two major Workload categories:

- High Performance workloads: where you want to speed up the processing of a single task on a parallel architecture (where you can exploit Data parallelism, e.g. Rendering, Astronomical simulations etc)

- High Throughput workloads: where you want to maximize the number of independent tasks per unit time, on a distributed architecture (e.g. Web scale applications)

Where your application(s) fall in this matrix determines what type of support you need from your Cloud fabric. There’s room for lots of niche players, each exposing the advantages of their unique choice of Deployment Infrastructure (how dynamic is it?) and PaaS features that are friendly to your Workload.

The above diagram shows some examples. Most of what we call Cloud computing falls into the lower right quadrant, as many vendors are proving the viability of the business model for this type of Workload (market). Features are still evolving.

Of course, Utility computing (top right) and “mainframe” class, massively parallel computing (top left) capacity has been available for hire for many, many years. What’s new here is how this can be accessed and used effectively over the internet (along with all that it entails: a simple programming/deployment model, manageability, and a friendly Cloud fabric to help you with all of this, in the variable cost model that is the promise of Cloud computing). Vendors will no doubt leverage their “Grid” management frameworks.

Personal HPC (bottom left) is another burgeoning area.
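For readers who prefer code to diagrams, the four quadrants named above can be written down as a small lookup. This is just a sketch of the mental model: the enum names and the `fabric_quadrant` function are made up for illustration, and the quadrant labels are the ones used in this post.

```python
from enum import Enum


class Workload(Enum):
    HIGH_PERFORMANCE = "high performance"  # speed up a single task (X-axis, left)
    HIGH_THROUGHPUT = "high throughput"    # many independent tasks (X-axis, right)


class Parallelism(Enum):
    TASK = "task level"  # Y-axis, bottom
    DATA = "data"        # Y-axis, top


# Quadrant labels as described in the surrounding text.
QUADRANTS = {
    (Workload.HIGH_PERFORMANCE, Parallelism.DATA): "Mainframe-class massively parallel computing (top left)",
    (Workload.HIGH_THROUGHPUT, Parallelism.DATA): "Utility computing (top right)",
    (Workload.HIGH_PERFORMANCE, Parallelism.TASK): "Personal HPC (bottom left)",
    (Workload.HIGH_THROUGHPUT, Parallelism.TASK): "Cloud computing (bottom right)",
}


def fabric_quadrant(workload, parallelism):
    """Return the quadrant of the matrix that a workload falls into."""
    return QUADRANTS[(workload, parallelism)]


# A web-scale application: many independent requests, each running its own code path.
print(fabric_quadrant(Workload.HIGH_THROUGHPUT, Parallelism.TASK))
```

Real applications sit on a continuum rather than in four neat boxes, so treat the lookup as a starting point for the conversation with a vendor, not a classifier.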

Many of these may not even be viable in the marketplace. That will be determined by market demand (OK, maybe with some marketing too).

Hope this provides a good framework to think about the Cloud Fabric support you need, depending on where your applications fall in this continuum. I’m sure I have missed other Workload categories, and I’d always be interested in hearing your thoughts and insights, of course.

So, what color is your Cloud Fabric?

So, you want to migrate to the Cloud…

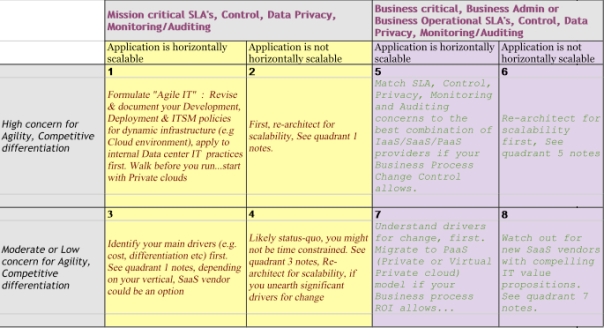

In Cloud Computing, Data center on January 1, 2009 at 2:15 am

As we head into 2009, I can’t help thinking about how Cloud computing will affect ITSM as more companies think about leveraging the variable cost structure/dynamic infrastructure model it enables. While IT capabilities provide or enable competitive advantages (and hence require “agility” in terms of features and capabilities, and systemic qualities such as Scalability), other business considerations such as IT service criticality (is it Mission Critical?) also influence the migration strategy. Here’s one way to look at this map:

You can imagine mapping your application portfolio along these dimensions.

Many of these applications could be considered Mission Critical, depending on the business you’re in. You may even want some of these applications to be “Agile” in the sense that you want quick/constant changes or additional features or capabilities to stay ahead of your competition. The Evaluation grid above is a framework to help you quickly identify Cloud migration candidates, and priorities based on both business/economic viability as well as technical feasibility.

Step 1:

First, let’s talk about the types of applications we deal with in the enterprise. From the perspective of the enterprise business owner, applications could fall under:

- Business infrastructure applications (generic): Email, Calendar, Instant Messaging, Productivity apps (Spreadsheets etc), Wikis. These are applications that every company needs in order to be in business, and this category might not necessarily provide any competitive advantage. You just expect these to work (i.e. to be available and to support a frictionless, information-bonded organization).

- Business platform applications: ERP, CRM, Content and Knowledge management etc. These are key IT capabilities on which core business processes are built. These capabilities need to evolve with the business process, as enterprises identify and target new market opportunities.

- Business “vertical” applications, supporting specific business processes for your industry type. E.g. Online stores, Catalogs, Back-end services, Product life-cycle support services etc.

Once you identify applications falling into the above categories, you can map them onto the Evaluation grid above, based on business needs (“Agility”) versus current levels of Support (Mission Critical, Business Critical, Business Operational etc) as well as Architectural considerations (mainly Scalability).

Generally, applications falling under Quadrants 1 and 5 are your Tier 1 candidates for migration, subject to further due diligence (RoI; since they’re already scalable, you face fewer engineering development challenges than business, data privacy and IT control challenges).

Applications falling in Quadrants 2 and 6 require extensive preparation.

Applications falling in Quadrants 3, 4, 7 and 8 could be your “Tier 2” candidates, with those in Quadrants 3 and 4 taking higher precedence.

Whether it is for internal business process support or for external service delivery, the primary drivers for Enterprises to consider Cloud computing are (among other things, of course):

- Cost

- Time to market considerations

- Business Agility
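Returning to the quadrant guidance in Step 1, the rules can be sketched as a small function that turns a portfolio map into a migration plan. The quadrant numbers and tiers come from the text above; the function name, the sample applications and their quadrant assignments are hypothetical.

```python
def migration_priority(quadrant):
    """Map an Evaluation grid quadrant (1 to 8) to the Step 1 migration guidance."""
    if quadrant in (1, 5):
        return "Tier 1: migrate first, after due diligence"
    if quadrant in (2, 6):
        return "Needs extensive preparation"
    if quadrant in (3, 4):
        return "Tier 2: higher precedence"
    if quadrant in (7, 8):
        return "Tier 2: lower precedence"
    raise ValueError("quadrant must be between 1 and 8")


# Hypothetical portfolio; where each application lands depends on your own grid.
portfolio = {"email": 1, "crm": 2, "online store": 3, "legacy billing": 8}

plan = {app: migration_priority(quadrant) for app, quadrant in portfolio.items()}
for app, guidance in plan.items():
    print(f"{app}: {guidance}")
```

The value is less in the code than in forcing an explicit quadrant for every application, which is usually where the disagreements between business and IT surface.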

Step 2:

Once you map your application portfolio, you can revisit your “Agile IT” strategy by reconsidering current practices with respect to:

- ITSM: while virtualization technologies introduce a layer of complexity, deploying to a Cloud computing platform on top of them requires revisiting our current Data Center/IT practices:

  - Change & Configuration Management: you can’t necessarily look at this in an application-specific way any more.

  - Incident Management: we need automated response based on policies.

  - Service Provisioning: requires proactive automation (this is where “elastic” computing hits the road). For dynamic provisioning, you need business rules that enable automation in this area, in addition to billing and metering.

  - Network Management: it needs to be “distributed” computing aware.

  - Disaster Recovery, Backups: how would this work in a Cloud environment?

  - Security, System Management: do you understand how your current model would work in a dynamic infrastructure environment?

  - Capacity planning, Procurement, Commissioning and de-commissioning: since it is easier to provision more instances of your IT “service” onto VMs, do you have solid business rules/SLAs driving automated provisioning policies? Does your engineering team understand how to validate their architecture (via Performance qualification for the target Cloud environment)? Is there a seamless hand-off to the Operations team, so they can tune Capacity plans based on Performance qualification inputs from Engineering?

- Revisiting Software engineering practices:

  - Programming model: make sure the Engineering organization is ready across the board, in terms of both skills and attitude, to make the transition. Adopt common REST API standards and patterns, leverage good practices (remember Yahoo Pipes?), even a “cloud simulator” if possible…

  - Application packaging & Installation: make sure the Engineering and Operations teams understand and agree on common standards for application-level packaging and installation, regardless of the Cloud platform you adopt.

  - Deployment model: ensure you have a standard “operating environment” down to the last detail (OS/patch levels, HW configurations for each of your architecture tiers, middleware versions, your internal infrastructure platform software versions etc).

  - Performance qualification, Infrastructure standards and templates: focus on measuring Throughput (Requests processed per unit time) and Latency levels on your “deployment model” defined above. You need this process to ensure adequate service levels on the Cloud platform.

  - Performance/SLAs & Application Monitoring: ensure you have Manageability (e.g. a JMX to SNMP bridge) and Observability (e.g. a JMX to DTrace bridge, or just plain JMX interfaces providing application-specific Observability) built into all the layers of your application stack at each architecture tier.
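The hand-off from Performance qualification to Capacity planning described in the list above can be sketched in a few lines. This is a minimal illustration, not a sizing method: the latency samples, the SLA thresholds, the instance limits and all function names are assumptions, and the percentile uses the simple nearest-rank definition.

```python
import math


def throughput_rps(request_count, window_seconds):
    """Requests processed per second over the measurement window."""
    return request_count / window_seconds


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]


def desired_instances(target_rps, per_instance_rps, min_instances, max_instances):
    """Size the pool for the target load, clamped by business rules (floor and ceiling)."""
    needed = math.ceil(target_rps / per_instance_rps)
    return max(min_instances, min(max_instances, needed))


# Hypothetical qualification run: one instance, 10 requests observed over 2 seconds.
latencies_ms = [12, 15, 11, 14, 13, 16, 12, 18, 40, 14]
per_instance_rps = throughput_rps(len(latencies_ms), window_seconds=2.0)
p95_ms = percentile(latencies_ms, 95)

# Engineering signs off only if the latency SLA (assumed here: p95 of at most 50 ms) holds.
qualified = p95_ms <= 50

# Operations then sizes for an expected 23 requests per second, between 2 and 10 instances.
instances = desired_instances(23, per_instance_rps, min_instances=2, max_instances=10)

print(per_instance_rps, p95_ms, qualified, instances)  # 5.0 40 True 5
```

The floor and ceiling are where the business rules show up: the minimum protects availability, and the maximum protects the budget.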

Happy migration! Let me know what your experiences are….