“Cloud Fabric” is a phrase that evokes a myriad of complex capabilities and unique features, depending on which vendor you talk to. Here, I take the wabi-sabi approach of simplifying things down to the essentials, and then try to make sense of the different vendor variations within a simple, high-level framework or mental model.

Before we go into the details, a caveat: the types of Cloud fabric discussed in this framework are necessarily provisional, in the sense that technological improvements (e.g. Intel already has a research project with 80 cores on a single chip) will influence this space in significant ways.

Back to the topic: loosely defined, Cloud fabric is the term for the abstraction that underpins Cloud platform support. It should cover all aspects of the life-cycle and the (automated) operational model for Cloud computing. While vendors offer many different flavors of Cloud fabric, it is important to keep in mind the general context that drives features into the Cloud fabric: the “Cloud fabric” must support, as transparently as possible, the binding of an increasing variety of Workloads to (varying degrees of) Dynamic infrastructure. Of course, vendors may specialize in fabric support for specific types of Workloads, with limited or no support for Dynamic infrastructure. By Dynamic infrastructure, I mean infrastructure that is not only “elastic”, but also “adaptive” to your deployment optimization needs.

That means your compute, storage and even networking capacity are sufficiently “virtualized” and “abstracted” that elasticity and adaptiveness directly address application workload needs, as transparently as possible. (For example: is the PaaS or IaaS provider on a converged data center network, or does it still run I/O and storage networks in parallel universes?) If the VMs running your application components can migrate, so should storage and interconnection topologies (firewall, proxy and load balancer rules, etc.), seamlessly and, from the customer’s perspective, “transparently”.

Somewhere all of this virtualization meets the “un-virtualized” world!

Whether you are a PaaS/IaaS, “Cloud-center” or “Virtual private Data center” provider, variations in Cloud fabric capabilities stem from the degree of support along two dimensions:

- Breadth of Workload categories that can be supported. E.g. AWS and Google App Engine are geared towards workloads that fit the Shared-nothing, distributed computing model, based on Horizontal scalability using open-source software (OSS), commodity servers and (mostly) generic network switching infrastructure. Keep in mind that Workload characterization is an active research area, so I won’t pretend to boil this ocean (I am not an expert by any stretch of the imagination); I will just represent the broad areas that seem to be driving the Cloud business models.

- How “Dynamic” is the Cloud infrastructure layer in supporting the Workload categories above? Hardware capacity, configuration and the general end-to-end topology of the deployment infrastructure matter when it comes to performance. No single Cloud Fabric can transparently accommodate the performance needs of every type of Workload. E.g. I wouldn’t try to dynamically provision VMs for my Online gaming service on AWS unless I could also control routing tables and the multicast traffic strategy. (Generally, in a distributed computing architecture, you want to push the processing of state information close to where your data is. In the Online gaming use case, that could mean your multicast packets need to reach only a limited set of nodes that are in the same “online neighborhood” as the players.) Imagine provisioning VMs and storage dynamically for such an application across your data center without any thought for deployment optimization. Without an adaptive infrastructure under the covers, an application that has worked perfectly in the past might experience serious performance issues in the Cloud environment. Today, it is the PaaS programming and deployment model that determines which Workloads are a good match.
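To make the “online neighborhood” idea concrete, here is a minimal Python sketch of neighborhood-scoped fan-out, a common area-of-interest approach in online games. Everything in it is a hypothetical assumption for illustration: the world is split into square grid cells, each cell is hosted by one server node, and a state update is sent only to the nodes hosting the emitting cell and its eight neighbors, instead of being broadcast to the whole cluster. The names `CELL_SIZE`, `cell_of`, `neighborhood` and `recipients` are invented here.

```python
# Hypothetical setup: square grid cells, each hosted by one server node.
CELL_SIZE = 100.0  # illustrative cell width in world units

Cell = tuple[int, int]


def cell_of(x: float, y: float) -> Cell:
    """Map a world position to the grid cell that contains it."""
    return (int(x // CELL_SIZE), int(y // CELL_SIZE))


def neighborhood(cell: Cell) -> set[Cell]:
    """The cell itself plus its 8 surrounding cells (the 'online neighborhood')."""
    cx, cy = cell
    return {(cx + dx, cy + dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1)}


def recipients(x: float, y: float, cell_to_node: dict[Cell, str]) -> set[str]:
    """Nodes that must receive a state update emitted at (x, y).

    Cells outside the world (not in cell_to_node) are skipped; every other
    node in the cluster is left out of the fan-out entirely.
    """
    return {cell_to_node[c] for c in neighborhood(cell_of(x, y)) if c in cell_to_node}


# Hypothetical 4x4-cell world spread over four nodes, one 2x2 block of cells each.
cell_to_node = {(cx, cy): f"node-{cx // 2}{cy // 2}" for cx in range(4) for cy in range(4)}

print(sorted(recipients(50.0, 50.0, cell_to_node)))   # update deep inside node-00's block
print(sorted(recipients(150.0, 50.0, cell_to_node)))  # update near a block boundary
```

The point for the Cloud fabric is the mapping `cell_to_node`: if VMs hosting neighboring cells are provisioned without regard to network topology, even this small fan-out can end up crossing distant parts of the data center.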

So, in general, Cloud fabric should support Transparent scaling, Metering/Billing, “Secure”, Reliable and Automated management, Fault tolerance, Deployment templates, good programming/deployment support, “adaptive” clustering, etc. But the specific Cloud fabric features and Use Case support you need depend on where your application sits at the intersection of the Workload and its need for Dynamic/adaptive Infrastructure. Here’s what I mean…

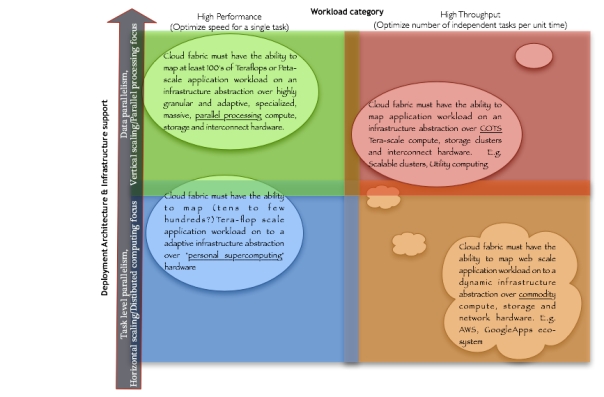

Example of functional capabilities required of Cloud fabric based on Workload type and Infrastructure/deployment needs

Let me explain the table above: the Y-axis represents a continuum of deployment technologies and infrastructure, from support for “Task level parallelism” (typically thread-parallel computation, where each core might be running different code) at the bottom to “Data parallelism” (typified by multiple CPUs or cores running the same code against different data) at the top. The X-axis broadly represents the two major Workload categories:

- High Performance workloads: where you want to speed up the processing of a single task on a parallel architecture, exploiting Data parallelism (e.g. rendering, astronomical simulations, etc.)

- High Throughput workloads: where you want to maximize the number of independent tasks per unit time on a distributed architecture (e.g. Web scale applications)
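The two ends of the Y-axis can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a benchmark: `brighten`, `handle_login` and `handle_search` are hypothetical stand-ins for a rendering tile and two unrelated web requests. Threads are used only to show the *structure* of each model portably; in CPython the GIL prevents threads from speeding up CPU-bound work, so a real data-parallel job would use processes, GPUs or a cluster.

```python
from concurrent.futures import ThreadPoolExecutor


# Data parallelism: the SAME code runs against different slices of the data.
def brighten(pixels: list[int]) -> list[int]:
    """Hypothetical per-chunk work, e.g. one tile of a rendering job."""
    return [min(p + 10, 255) for p in pixels]


def data_parallel(image: list[int], workers: int = 4) -> list[int]:
    """Split one job into chunks, process them concurrently, reassemble in order."""
    if not image:
        return []
    size = -(-len(image) // workers)  # ceiling division: chunk length
    chunks = [image[i:i + size] for i in range(0, len(image), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(brighten, chunks))  # map preserves chunk order
    return [p for chunk in results for p in chunk]


# Task parallelism: DIFFERENT code runs concurrently, e.g. independent requests.
def handle_login(user: str) -> str:
    return f"login ok: {user}"


def handle_search(query: str) -> str:
    return f"results for: {query}"


def task_parallel() -> list[str]:
    """Run unrelated tasks side by side; each completes independently."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        futures = [pool.submit(handle_login, "alice"),
                   pool.submit(handle_search, "cloud fabric")]
        return [f.result() for f in futures]


print(data_parallel([0, 100, 250, 251]))
print(task_parallel())
```

Roughly, the first function is the shape of a High Performance workload (one task made faster by splitting its data), while the second is the shape of a High Throughput workload (many independent units of work completed per unit time).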

Where your application(s) fall in this matrix determines what type of support you need from your Cloud fabric. There’s room for lots of niche players, each emphasizing the advantages of its particular choice of Deployment Infrastructure (how dynamic is it?) and of PaaS features that suit your Workload.

The diagram above shows some examples. Most of what we call Cloud computing falls into the lower right quadrant, where many vendors are proving the viability of the business model for this type of Workload (market). Features are still evolving.

Of course, Utility computing (top right) and “Main-frame” class, massively parallel computing (top left) capacity have been available for hire for many, many years. What’s new here is how this capacity can be accessed and used effectively over the internet, along with all that it entails: a simple programming/deployment model, manageability, and a friendly Cloud fabric to help you with all of this, in the variable cost model that is the promise of Cloud computing. Vendors will no doubt leverage their “Grid” management frameworks.

Personal HPC (bottom left) is another burgeoning area.
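As a compact recap, the matrix can be encoded as a lookup from the two axes to the quadrant examples named above. This is only an illustrative sketch: the enum and function names are hypothetical, and the quadrant positions are those given in the text (High Throughput on the right, Data parallelism at the top), not a vendor taxonomy.

```python
from enum import Enum


class Workload(Enum):
    HIGH_PERFORMANCE = "speed up a single task"              # left half (X-axis)
    HIGH_THROUGHPUT = "maximize independent tasks per time"  # right half (X-axis)


class Parallelism(Enum):
    TASK = "task level parallelism"  # bottom of the Y-axis
    DATA = "data parallelism"        # top of the Y-axis


# Quadrant examples as placed in the text; an illustrative encoding only.
QUADRANTS = {
    (Workload.HIGH_THROUGHPUT, Parallelism.TASK): "Cloud computing as commonly understood (lower right)",
    (Workload.HIGH_THROUGHPUT, Parallelism.DATA): "Utility computing (top right)",
    (Workload.HIGH_PERFORMANCE, Parallelism.DATA): "Main-frame class, massively parallel computing (top left)",
    (Workload.HIGH_PERFORMANCE, Parallelism.TASK): "Personal HPC (bottom left)",
}


def classify(workload: Workload, parallelism: Parallelism) -> str:
    """Return the quadrant a Workload/infrastructure combination falls into."""
    return QUADRANTS[(workload, parallelism)]


# A Web scale application: independent requests, each running its own code path.
print(classify(Workload.HIGH_THROUGHPUT, Parallelism.TASK))
```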

Many of these may not even be viable in the marketplace; that will be determined by market demand (OK, maybe with some marketing too).

I hope this provides a useful framework for thinking about the Cloud Fabric support you need, depending on where your applications fall in this continuum. I have probably missed other Workload categories; I’m always interested in hearing your thoughts and insights.

So, what color is your Cloud Fabric?